-

Notifications

You must be signed in to change notification settings - Fork 107

Enable strict mode in js #143

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

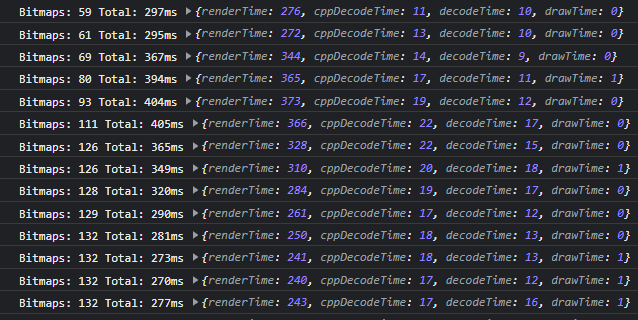

as I already mentioned in #138, most performance lost is in constructing data objects like ImageData and Uint8ClampedArray and converting the bitmaps to images, so strict mode doesn't offer much performance benefits here anyways you can insanely easily minimize the JS overhead to close to 0 by patching the resizable buffer function of EMSC so you don't need to construct the data array, and instead just reference it or if you don't want to implement offscreen rendering just slice the buffer exposed my EMSC and move bitmap conversion to WASM as you can see by: |

|

With strict mode I'm mostly interested in catching otherwise silent bugs early. I would like to ensure there’s no performance penalty; a performance improvement would ofc be a neat side effect. Since my tests had high run-to-run variance and I'm not familiar with benchmarking JS, but you appear to be, it would be reassuring if you could verify there’s no penalty. I don't want to coerce you into anything though, if you are not interested just say so and I'll see what else I can do. |

strict mode only really changes things if there's a JS function called say 100k times in a few seconds, then it might be marginally slower you shouldn't really use strict mode to catch bugs, it won't do that for you, not even in the slightest, from the sounds of it you'll be interested in a linter such as |

It will in some cases, as I can personally account for :) (for example typos in variable assignments not silently creating new global variables)

Exactly, that’s why this isn't the focus of this change.Yet performance degradations (due to additional checks) are undesireable so this must be tested beforehand (i.e. does the better optimisation due to not having to account for some features and side effects outweigh or neutralise the additional checks; or are the additional checks insignificant?) The essence is, it would be really great if you could leverage your js benchmark knowledge and check if this casuses perf degradation (bad), perf improvement or no change (both good). |

|

If you provide me with the built files I could benchmark this, FYI I'm on IRC right now, we can chat there |

Sure, here’s a version of current master (after the build cleanup patches were merged) and one with this patch applied: strict-nonstrict-comp.zip. OffTopic:

I am now, but you aren't anymore ^^`. Fyi, if you plan to use IRC more: there are IRC Bouncers which will let you stay connected and not miss messages even when your client(s) are offline, or alternatively using e.g. a XMPP<->IRC or Matrix<->IRC bridge will also serve as a sort of bouncer. |

|

strict mode was ~1% slower which could be chalked up to measuring error from inaccurate/missing samples |

This will make some types of bugs easier to detect and avoid accidental additions of code not working inside Modules, which always use strict mode. Performance measurements show no relevant performance difference between strict and non-strict mode, so this won't cause regressions in this regard.

|

Thanks! It seems this is good to merge then. |

In my crude and limited tests, I didn't see any relevant performance difference not within run-to-run variance (i.e. also no degradation), but I'm not familiar with benchmarking js.

@ThaUnknown: would you like to take a look and see, if this has a negative, positive or no performance impact?