ReservoirComputing.jl provides an efficient, modular, and easy-to-use implementation of Reservoir Computing models such as Echo State Networks (ESNs). For information on using this package, please refer to the stable documentation. To preview features that have not been released yet, use the in-development documentation.

ReservoirComputing.jl provides layers, models, and functions to help build and train reservoir computing models. More specifically, the software offers:

- Base layers for reservoir computing model construction, such as `ReservoirChain`, `Readout`, `Collect`, and `ESNCell`
- Fully built models, such as `ESN` and `DeepESN`
- 15+ reservoir initializers and 5+ input layer initializers
- 5+ reservoir state modification algorithms
- Sparse matrix computation through SparseArrays.jl
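To make the ingredients listed above more concrete, here is a minimal, library-independent sketch of what an ESN with a sparse reservoir computes, written in plain Julia using only the LinearAlgebra, Random, and SparseArrays standard libraries. The helpers `sparse_reservoir`, `collect_states`, and `ridge_readout` are hypothetical and are not part of the ReservoirComputing.jl API. The uniform weights, the spectral-radius rescaling, the leak-free `tanh` update, and the ridge-regression readout are assumptions modeled on the common ESN recipe, not a description of how `rand_sparse` or `train!` are implemented in the library.

```julia
using LinearAlgebra, Random, SparseArrays

# Hypothetical helper (not the ReservoirComputing.jl API): build a sparse
# random reservoir with nonzeros uniform in [-1, 1) and rescale it so that
# its spectral radius equals `radius`.
function sparse_reservoir(rng::AbstractRNG, n::Int; radius=1.2, sparsity=6 / n)
    W = sprand(rng, n, n, sparsity)          # nonzeros uniform in [0, 1)
    nz = nonzeros(W)                         # the stored values of W, modified in place
    nz .= 2 .* nz .- 1                       # map them to [-1, 1)
    rho = maximum(abs, eigvals(Matrix(W)))   # current spectral radius
    rho > 0 || error("reservoir has zero spectral radius; increase sparsity")
    return W .* (radius / rho)
end

# Hypothetical helper: drive the reservoir with the input sequence `u`
# (one column per time step) using x(t+1) = tanh.(W*x(t) + W_in*u(t)),
# storing each state as a column of the returned matrix.
function collect_states(W, W_in, u::AbstractMatrix)
    n, T = size(W, 1), size(u, 2)
    X = zeros(n, T)
    x = zeros(n)
    for t in 1:T
        x = tanh.(W * x .+ W_in * u[:, t])
        X[:, t] = x
    end
    return X
end

# Hypothetical helper: ridge-regression readout,
# W_out = Y X' (X X' + lambda I)^(-1).
ridge_readout(X, Y; lambda=1e-6) = (Y * X') / (X * X' + lambda * I)

# Toy usage: one-step-ahead prediction of a sine wave.
rng = MersenneTwister(0)
n = 100
W = sparse_reservoir(rng, n; radius=0.9, sparsity=0.05)
W_in = 0.1 .* (2 .* rand(rng, n, 1) .- 1)   # small dense input weights
u = reshape(sin.(0.1 .* (1:1001)), 1, :)    # 1 x 1001 input signal
input, target = u[:, 1:end-1], u[:, 2:end]  # target is one step ahead of input

X = collect_states(W, W_in, input)
washout = 50                                 # drop the initial transient states
W_out = ridge_readout(X[:, washout+1:end], target[:, washout+1:end])
pred = W_out * X[:, washout+1:end]
mse = sum(abs2, pred .- target[:, washout+1:end]) / length(pred)
```

Only the readout `W_out` is fitted here; the reservoir `W` and input weights `W_in` stay fixed after initialization, which is what keeps reservoir computing training cheap.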

ReservoirComputing.jl can be installed in either of the following ways:

```julia
julia> ] #actually press the closing square brackets
pkg> add ReservoirComputing
```

or

```julia
using Pkg
Pkg.add("ReservoirComputing")
```

To illustrate the workflow of this library, we will show how to train an ESN to learn the dynamics of the Lorenz system.
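For reference, the `lorenz` function in this example encodes the classical Lorenz system, where the parameter vector `p` holds $(\sigma, \rho, \beta) = (10, 28, 8/3)$:

$$
\begin{aligned}
\dot{x} &= \sigma (y - x),\\
\dot{y} &= x(\rho - z) - y,\\
\dot{z} &= xy - \beta z.
\end{aligned}
$$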

As a general first step, we fix the random seed for reproducibility:

```julia
using Random
Random.seed!(42)
rng = MersenneTwister(17)
```

For an autoregressive prediction, we need the target data to be one step ahead of the training data:

```julia
using OrdinaryDiffEq

#define lorenz system
function lorenz(du, u, p, t)
    du[1] = p[1] * (u[2] - u[1])
    du[2] = u[1] * (p[2] - u[3]) - u[2]
    du[3] = u[1] * u[2] - p[3] * u[3]
end

#solve and take data
prob = ODEProblem(lorenz, [1.0f0, 0.0f0, 0.0f0], (0.0, 200.0), [10.0f0, 28.0f0, 8/3])
data = Array(solve(prob, ABM54(); dt=0.02))

shift = 300
train_len = 5000
predict_len = 1250

#one step ahead for generative prediction
input_data = data[:, shift:(shift + train_len - 1)]
target_data = data[:, (shift + 1):(shift + train_len)]
test = data[:, (shift + train_len):(shift + train_len + predict_len - 1)]
```

We can either use the provided `ESN` or build one from scratch.

Here we showcase the first option and use the provided `ESN`:

```julia
using ReservoirComputing

input_size = 3
res_size = 300
esn = ESN(input_size, res_size, input_size;
    init_reservoir=rand_sparse(; radius=1.2, sparsity=6/300),
    state_modifiers=NLAT2
)
```

ReservoirComputing.jl builds on Lux(Core), so in order to train the model we first need to instantiate the parameters and the states:

```julia
ps, st = setup(rng, esn)
ps, st = train!(esn, input_data, target_data, ps, st)
```

We can now use the trained ESN to forecast the Lorenz system dynamics:

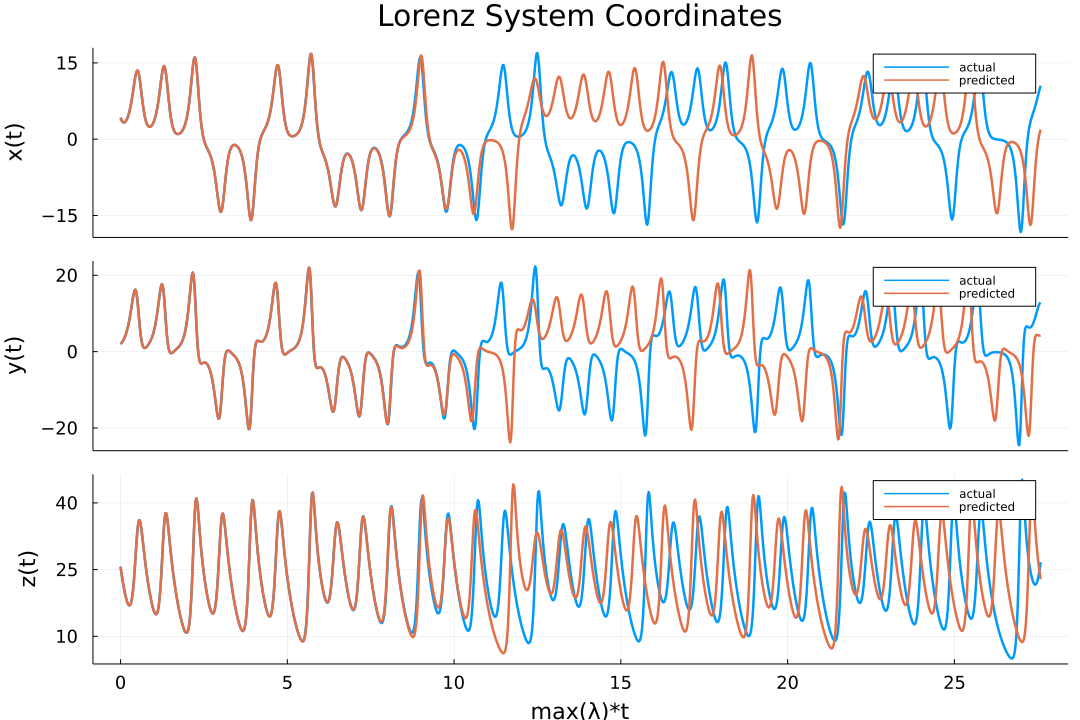

output, st = predict(esn, 1250, ps, st; initialdata=test[:, 1])We can now visualize the results

using Plots

plot(transpose(output); layout=(3, 1), label="predicted");

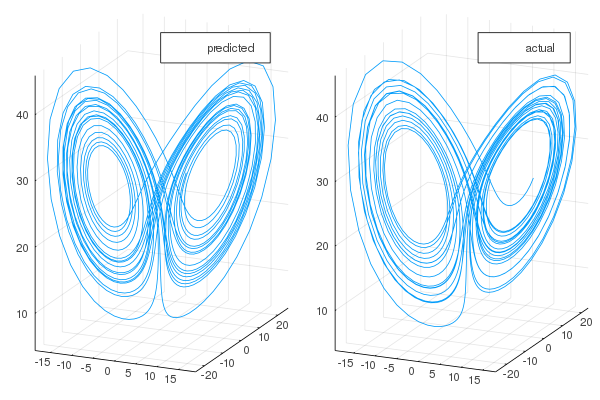

plot!(transpose(test); layout=(3, 1), label="actual")One can also visualize the phase space of the attractor and the comparison with the actual one:

plot(transpose(output)[:, 1],

transpose(output)[:, 2],

transpose(output)[:, 3];

label="predicted")

plot!(transpose(test)[:, 1], transpose(test)[:, 2], transpose(test)[:, 3]; label="actual")If you use this library in your work, please cite:

```bibtex
@article{martinuzzi2022reservoircomputing,
  author = {Francesco Martinuzzi and Chris Rackauckas and Anas Abdelrehim and Miguel D. Mahecha and Karin Mora},
  title = {ReservoirComputing.jl: An Efficient and Modular Library for Reservoir Computing Models},
  journal = {Journal of Machine Learning Research},
  year = {2022},
  volume = {23},
  number = {288},
  pages = {1--8},
  url = {http://jmlr.org/papers/v23/22-0611.html}
}
```

This project was made possible thanks to initial funding through the Google Summer of Code 2020 program. Francesco M. further acknowledges ScaDS.AI and RSC4Earth for supporting the current progress on the library.